EDEN: multimodal synthetic dataset of Enclosed garDEN scenes

Hoang-An Le, Partha Das, Thomas Mensink, Sezer Karaoglu, Theo Gevers

University of Amsterdam

Reading time ~6 minutes

A garden enclosed, my sister, my bride, a garden enclosed, a fountain sealed!

Your branches are a grove of pomegranates, with all choicest fruits

(Songs of Songs, 4:12-13)

Abstract

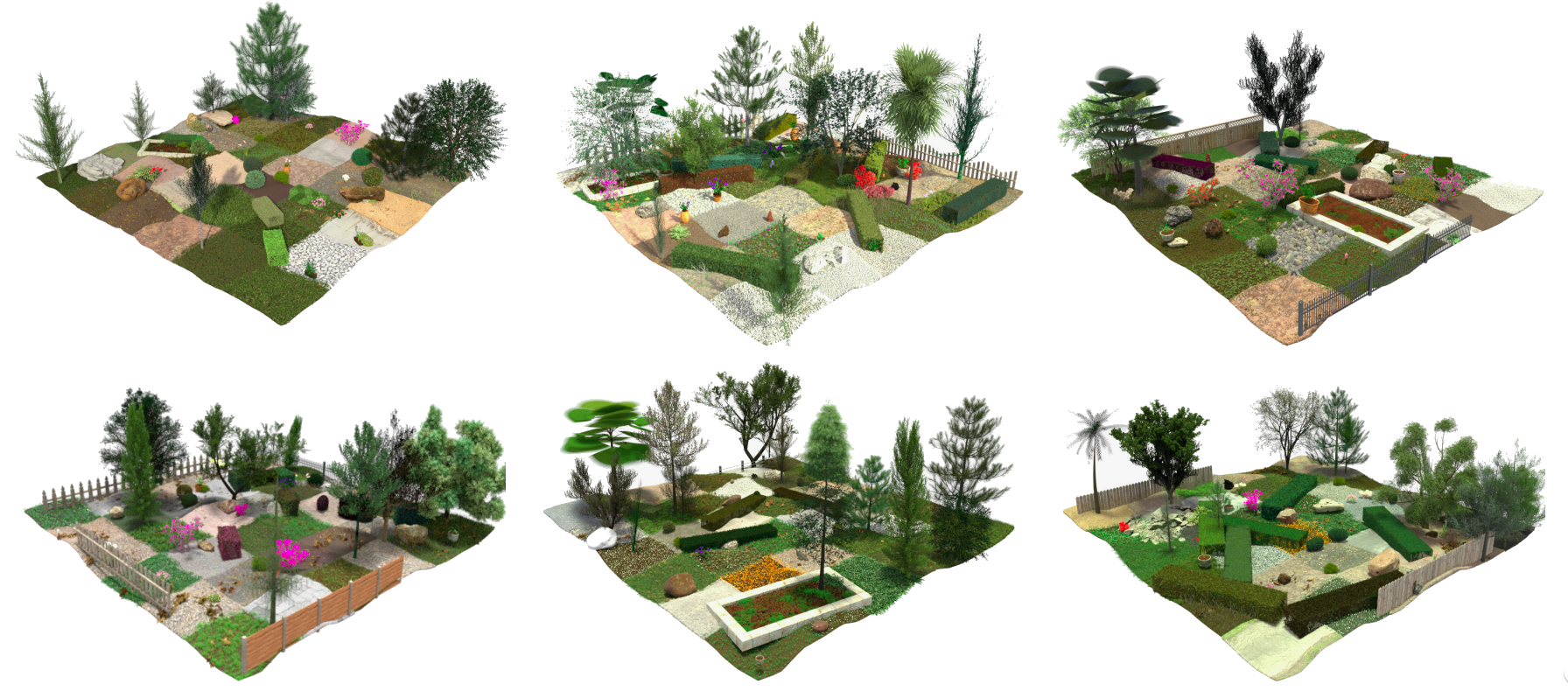

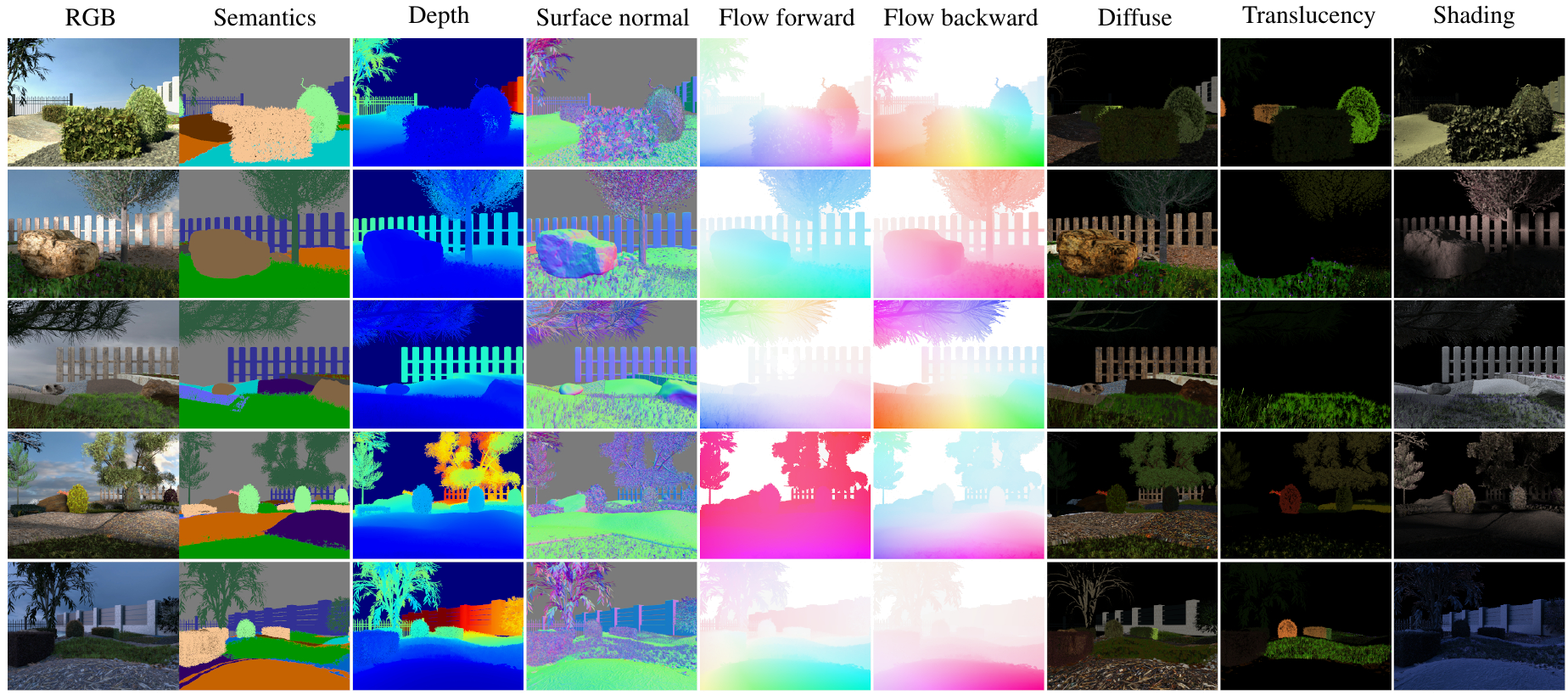

Multimodal large-scale datasets for outdoor scenes are mostly designed for urban driving problems. The scenes are highly structured and semantically different from scenarios seen in nature-centered scenes such as gardens or parks. To promote machine learning methods for nature-oriented applications, such as agriculture and gardening, we propose the multimodal synthetic dataset for Enclosed garDEN scenes (EDEN). The dataset features more than 300K images captured from more than 100 garden models. Each image is annotated with various low/high-level vision modalities, including semantic segmentation, depth, surface normals, intrinsic colors, and optical flow. Experimental results on the state-of-the-art methods for semantic segmentation and monocular depth prediction, two important tasks in computer vision, show positive impact of pre-training deep networks on our dataset for unstructured natural scenes.

Data

| Data | Full set | Sample set |

|---|---|---|

| Statistics & Splits | 26M | |

| Camera poses | 197M | |

| Light information | 131M | |

| RGB | 333G | 11G |

| Segmentation | 13G | 439M |

| Depth | 241G | 7.7G |

| Surface Normal | 130G | 4.6G |

| Intrinsics | 440G | 14G |

Segmentation

- The instance labels

*_Inst.pngare encoded in 32-bit integer png images, using the following function

instance_label = semantic_label * 100 + instance_number

- Semantic labels follow the full TrimBot2020 annotation set, while the 3DRMS challenge and experiments done in the paper follow the reduced label set.

| Label full | Label reduced | Name | Label full | Name |

|---|---|---|---|---|

| 0 | 0 | void | ||

| 2 | 1 | grass | ||

| 1 | 2 | ground | 3 | dirt |

| 4 | gravel | |||

| 5 | mulch | |||

| 6 | pebble | |||

| 7 | woodchip | |||

| 8 | 3 | pavement | ||

| 10 | 4 | hedge | ||

| 20 | 5 | topiary | ||

| 30 | 6 | rose | 31 | rose stem |

| 32 | rose branch | |||

| 33 | rose leaf | |||

| 34 | rose bud | |||

| 35 | rose flower | |||

| 100 | 7 | obstacle | 103 | fence |

| 104 | step | |||

| 105 | flowerpot | |||

| 106 | stone | |||

| 102 | 8 | tree | ||

| 220 | 9 | background | 223 | sky |

- To convert from full annotation set to the reduced set, the python mapping code is provided below

full2reduced = {2: 1, # grass

1: 2, 3: 2, 4: 2, 5: 2, 6:2, 7:2, # ground

8: 3, #

10:4, # hedge

20: 5, # topiary

30: 6, 31: 6, 32: 6, 33: 6, 34: 6, 35: 6, # flower

100: 7, 103: 7, 104: 7, 105: 7, 106: 7, # obstacle

102: 8, # tree

220: 9, 223: 9, # background

0: 0 # unknown

}

Compared to 3DRMS data

Some of the EDEN models has been used for the challenge at ECCV’2018 workshop of 3D Reconstruction meets Semantics by @TrimBot2020, available at 3DRMS challenge. The subsequent scenes from these models (see below) have been re-rendered and have their scene-number changed. The general image appearances basically stay the same as in the 3DRMS-challenge data yet not a perfect match. Some small differences are present from the random particle models (such as leaf, grass particles, etc.) due to different random seeds. Therefore, mixing the modalities of the same images between the 2 datases are is inadvisable.

| EDEN | 3DRMS |

|---|---|

| 0001 | 0001 |

| 0026 | 0026 |

| 0033 | 0033 |

| 0039 | 0039 |

| 0042 | 0043 |

| 0053 | 0052 |

| 0068 | 0069 |

| 0127 | 0128 |

| 0160 | 0160 |

| 0192 | 0192 |

| 0223 | 0224 |

| 0288 | 0288 |

Paper

Presentation

Citation

If you find the material useful please consider citing our work

@inproceedings{le21wacv,

author = {Le, Hoang{-}An and Das, Partha and Mensink, Thomas and Karaoglu, Sezer and Gevers, Theo},

title = {{EDEN: Multimodal Synthetic Dataset of Enclosed garDEN Scenes}},

booktitle = {Proceedings of the IEEE/CVF Winter Conference of Applications on Computer Vision (WACV)},

year = {2021},

}